|

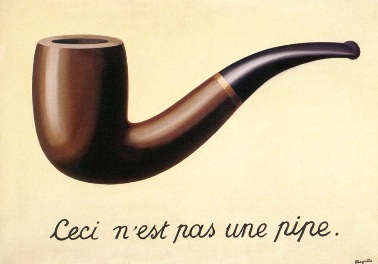

| It is a (two-dimension) representation of a pipe. |

The map is not the territory, but in software engineering terms maps are models of it. A frequent remark about models is that they are all wrong, as they eliminate many aspects of what they refer to. Big efforts can certainly go very wrong if they ignore something that turns out to be very relevant, but models in general try to focus on the few aspects that are relevant for a particular job to be done. That's what makes them useful despite their limitations.

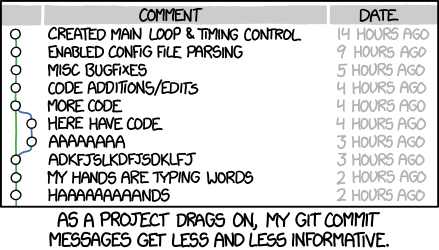

If this philosophy were more established, we would spare software engineers many an effort to keep a visual model completely up to date with code that is changing at a rapid pace. The continuous invalidation of a diagram suggests either that it is not abstract enough, or that it is a symptom of other, larger problems.

Coming back to the map metaphor, geographical maps emphasize one primary aspect, generally mapped across two dimensions. For example, elevation or depth with respect to sea level.

As in Wardley maps, architectural maps can use meaningful spatial dimensions, even if those dimensions don't correspond to a physical location the way the squares on a chess board or a projection of the world do. In a Wardley map, one key dimension is visibility (to the user), as the map captures a value chain where products use components, which in turn use other components or utilities.

Unlike the modeling happening in Domain-Driven Design, I'm referring mostly to models of the software itself here rather than models of the domain; domain models in diagram and code format are just one specific example of the activity.

Which maps does a team need?

To answer this question, consider the goals for a team to:

- identify and capture what "good" looks like for them in this project

- easily see divergence from that to correct it

- take fewer decisions over and over; reuse patterns that have emerged, and have been tried and tested multiple times already in similar changes

Some would call this documentation, or diagrams, and would try to fit them into some formal notation so that they are completely unambiguous, as if they were to be published in some application for funding. These maps are lightweight: they are only meant to be used internally by a team, and there is no formal notation acting as a barrier to entry (much like EventStorming involves everyone in a room with only a set of orange stickies).

I've seen a basic Miro palette emerging depending on what the team is comfortable with:

- boxes and arrows, with a couple of arrow styles if necessary

- stickies to capture particular decisions (text)

- color coding to track status (e.g. adopted as best known practice, team fully endorsed it, deprecated/suspicious)

Substitute your favourite digital whiteboard product. I have not attempted to do this in an office setting. I suspect Miro lowers the cost of change enough to make this feasible, both in the sense of not churning through paper and markers and in the sense of having a simple enough UX that it can be picked up in a day to allow contribution.

The first of these maps replaced an attempt at maintaining Architectural Decision Records. Software engineering involves continuously taking hundreds of decisions, in different places, at different scales. I suspect ADRs cover the very high-level perspective, or consequential decisions; but they don't scale to a high number of decisions that delve into the inner workings of a smaller module without reaching line-of-code level. Different abstractions for different purposes.

ADRs are also immutable and go through a deprecation and superseding process. These maps are supposed to be mutated all the time, for refinement. The continuous application of new user stories applies the pressure to revisit decisions to better suit what we know now, one or two years after a product was created.

Some real world maps covering the same project

To keep scaling to a large number of decisions, I started classifying them into different maps, each dedicated to a specific aspect. There's no right choice on how to organize information into a hierarchy, but there always was a cognitive load limit on how many decisions can be quickly grasped or considered when looking at a particular component.

In more formal approaches these separate aspects are called views or viewpoints. In any situation they might arise to support a specific discussion rather than just because there wasn't enough space in another map. The hard step is possibly to move from one model to multiple models, while maintaining the same attention and ownership from the team.

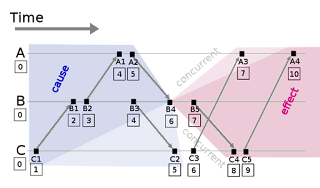

Here are some examples. The main dimension has usually been user visibility, but from left to right rather than from top to bottom like in Wardley maps.

The context around $productName

Includes no details about the internals of the system being worked on, but only other projects or organizations that the current system integrates with. Many things are out of the control of the team in this map. They are also often not visible in code at all, including for example a list of clients of our API.

I took this name from the C4 model's system context diagram.

Bounded Contexts and their languages

Where does a vocabulary apply consistently? For example, do we have a UX language to cater to users and a separate, symmetrical and consistent language for the underlying domain model? And how do they differ from the languages used or imposed upon us by third parties? Are we conforming to another team's language, or introducing anti-corruption layers?

I took this name from strategic Domain-Driven Design.

Static architecture

The dependencies between different modules of the codebase. Imports, requires, or use statements (depending on your language of choice) will ultimately define this. It's a static map because this information can be detected and distilled into the map without running any code. There are also additional decisions that are not represented in code: which committed folders are modules with a strong interface, and which are just folders?

A key map to foster cohesion (inside a component) and keep coupling under control (as it makes high-level dependencies visible).
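As a rough illustration of how this information can be distilled without running any code, here is a minimal sketch that scans source text for import statements and groups the results by top-level folder. Everything in it is an assumption made for the example: the `SourceFile` shape, the `dependencyMap` function, the file contents, the rule that the first path segment names the module, and the use of root-relative import specifiers. A regular expression is only an approximation here; a real tool would resolve relative paths and use a proper parser.

```typescript
// Hypothetical input shape: a file path plus its raw text (nothing is executed).
type SourceFile = { path: string; content: string };
type DependencyMap = Record<string, string[]>;

// Assumption: the first path segment names the module, e.g. "ui/button.ts" -> "ui".
function moduleOf(pathOrSpecifier: string): string {
  return pathOrSpecifier.split("/")[0];
}

// Builds module -> modules it depends on, ignoring third-party packages
// and imports that stay inside the same module.
function dependencyMap(files: SourceFile[]): DependencyMap {
  const modules = files.map((f) => moduleOf(f.path));
  const edges: DependencyMap = {};
  for (const file of files) {
    const from = moduleOf(file.path);
    // Matches `... from "x"`, `import "x"` and `require("x")`.
    const pattern = /(?:\bfrom\s+|\bimport\s+|\brequire\(\s*)["']([^"']+)["']/g;
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(file.content)) !== null) {
      const to = moduleOf(match[1]);
      const isInternal = modules.indexOf(to) !== -1;
      if (isInternal && to !== from) {
        const targets = edges[from] || (edges[from] = []);
        if (targets.indexOf(to) === -1) targets.push(to);
      }
    }
  }
  return edges;
}

// Made-up files standing in for a real codebase.
const files: SourceFile[] = [
  { path: "ui/button.ts", content: 'import { Price } from "domain/price";\nimport React from "react";' },
  { path: "domain/price.ts", content: 'import { round } from "domain/rounding";' },
  { path: "domain/rounding.ts", content: "export const round = Math.round;" },
  { path: "api/routes.ts", content: 'const { Price } = require("domain/price");\nimport "ui/button";' },
];

const edges = dependencyMap(files);
console.log(JSON.stringify(edges)); // {"ui":["domain"],"api":["domain","ui"]}
```

The output only says which folders depend on which. Whether `api` depending on `ui` is acceptable remains a decision for the team to record on the map.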

Testing map (or testability map)

What testing strategies are used consistently in any application layer? Unit testing classes or functions? Screenshot reference testing for the UI? What integrated tests are we using and what choices have been made for their setup or assertions?

Observability map

Which modules produce useful logs, and how can I access them? What is the difference between an error-level and a warning-level log? Which dashboards should we link to? This map is an entry point more than something that can visualize data directly, often linking to disparate tools from Grafana to Kubernetes dashboards.

Technology stack

Answers questions on the specifics of various programming languages and tools. It does not need to capture what can be enforced via linting rules instead, but the set of decisions includes more than just conventions:

- What safe subset of JavaScript or TypeScript are we endorsing for usage?

- What will we use to represent URLs or dates?

- How do we mark deprecated code intended to be replaced?

The choices made here often have security, performance or other non-functional implications.
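To make the questions above concrete, here is a small sketch of what a set of recorded answers could look like in code. The decisions themselves are hypothetical, not the ones taken on the project described here: URLs travel as `URL` objects rather than raw strings, dates cross module boundaries as ISO 8601 strings, and code awaiting replacement carries the JSDoc `@deprecated` tag, which TypeScript-aware editors surface to callers. The names `withPage`, `toIsoDate`, `IsoDate` and `appendPage` are invented for the example.

```typescript
// Hypothetical decision: URLs are passed around as `URL` objects, never raw strings.
function withPage(base: URL, page: number): URL {
  const next = new URL(base.toString()); // copy, so the caller's URL is not mutated
  next.searchParams.set("page", String(page));
  return next;
}

// Hypothetical decision: dates cross module boundaries as ISO 8601 UTC strings.
type IsoDate = string;

function toIsoDate(date: Date): IsoDate {
  return date.toISOString();
}

/**
 * @deprecated Use `withPage` instead; kept only until existing callers migrate.
 */
function appendPage(base: string, page: number): string {
  return base + "?page=" + page;
}

const url = withPage(new URL("https://example.com/items?sort=asc"), 2);
console.log(url.toString()); // https://example.com/items?sort=asc&page=2
console.log(toIsoDate(new Date(Date.UTC(2024, 0, 15)))); // 2024-01-15T00:00:00.000Z
```

The deprecated helper shows why the string-based approach was retired in this invented scenario: it breaks as soon as the base URL already carries a query string.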

Process and ownership

This was not a complete list! The set of maps should be owned by the whole team that uses them, not by its tech lead only. Decisions on retirement of maps can then be taken together.

Often, though, the tech lead has their senses oriented to detecting when a new map could be helpful, proposing its adoption at the right moment, and intentionally not filling it in completely so as to co-create it with the rest of the team.

As part of the development process, the team self-organizes to pick up user stories, and often has a refactoring checklist that they want to complete before closing off their unit of work and moving on to something else. Conceptual breakthroughs might also have happened as part of delivering value, like a new data structure having been identified and tested successfully. While most of the notes will be deleted as the team moves on, some can be captured by refactoring the code to follow our understanding. And some are hard to fit at that level and can be captured by maps.

Often items are marked with a specific color, indicating that a pair or a subset of the team has recorded these decisions but there is some catching up to do with the other team members so that they are all aware of the direction.

Speculations

A gap I would have liked to fill is explicitly referring to maps at the beginning of a new unit of work, at some granularity. For example, consulting them when a new epic or new user story is prioritized.

The results might be various:

- speeding up, as fewer decisions have to be taken and maps constitute an enabling constraint. I've seen this happen empirically more by referencing existing code. There is a trap waiting, though, as developers might pick an outdated item to copy from: they rely on memory to come up with a recent reference rather than the old button no one has touched in the last couple of years.

- invalidation of decisions. New business requirements may require a change in architecture to support them, hopefully infrequently.

- refinement. Some aspects we might find out of date, or obsolete, and maps could be simplified as a result.

One way to look at this process could be evidence-based: can we work within the existing architecture to deliver, or will we fail to do so? A spike can help us understand which one is the case. However, failure is not binary: we might never encounter an absolute blocker to delivery, and yet spend sweat and tears before deciding a new approach is needed.
